#10 - Frontier Tech - The Great Month of ML

From the open release of Stable Diffusion (SD), human-level speech recognition accuracy, new SOTA open CLIP models, Runway's text-to-video, and SD + Unreal character generation, to productizing the new era of ML.

Thanks for holding as I paused last week and made a small personal announcement :)

This week I decided halfway through editing that I needed another special edition entirely devoted to ML: large language models (LLMs), image generation, and general tooling, all of which have shot forward at a blistering pace. If this is up your alley, scroll on; if not, I’ll be back to the regular cadence next week.

ML Research Developments

8.22.22 → We started out with the public release of the Stable Diffusion model by the Stability AI folks (almost exactly a month ago … wild).

9.9.22 → New state of the art (SOTA) open CLIP models to drive image classification and generation forward (“Large Scale Open CLIP” from the folks at LAION, supported by Stability AI). CLIP models are embedded into the generative models behind DALLE 2, Stable Diffusion, and Midjourney.

9.7.22 → On the code generation side, GitHub ran a study that showed a 50% decrease in the time it took to write a web server in JavaScript when using GitHub Copilot (which turns natural language prompts into code suggestions).

9.9.22 → Runway dropped perhaps one of the sickest beta teasers** of all time, demoing text-to-video features that span importing background footage, removing background objects, generating background images, green-screening, and more, all coming soon in beta. (**We are investors in Runway.)

9.14.22 → Adept dropped ACT-1, their Transformer for Actions, which they trained on a number of complex software tools, such as Salesforce and Excel, that typically require complex navigation ability. Adept’s query box lets you issue natural language commands, by voice or text, to carry out complex tasks in Salesforce, Excel, etc., and it also takes feedback.

9.11.22 → Run Stable Diffusion locally on your Mac with Diffusion Bee.

9.17.22 → Six days later, this guy also created a way for you to run Stable Diffusion locally on your Mac. No internet, rate limiting, or account necessary.

9.19.22 → Interesting use case of both Stable Diffusion and CLIP: reverse image search. Lexica.art lets you input any photo and find similar Stable Diffusion-generated images, using CLIP ranking/retrieval.

(As the creator notes, this is particularly helpful for finding images where it’s hard to know the appropriate natural language query or a successful CLIP prompt. Think: you want to generate a specific texture or an abstract organic shape. Image retrieval, and general image sorting in latent space, is increasingly important but hard to do swiftly. [One example: you want a group of images that share an inspiration but are hard to describe. ‘Clothing where the texture is inspired by or visually similar to grass, moss, or dirt’ would be one such set of images.] I’m certainly excited about the applications that come out of this.)
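For readers curious what ranking images by similarity looks like mechanically, here is a minimal sketch of nearest-neighbor retrieval by cosine similarity. It assumes every image has already been turned into an embedding vector by a CLIP-style encoder. The 3-dimensional vectors, file names, and function names are made up for illustration, and this is not Lexica's actual implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_embedding, index, top_k=3):
    """Return the top_k (name, score) pairs most similar to the query."""
    scored = [
        (name, cosine_similarity(query_embedding, embedding))
        for name, embedding in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy embeddings standing in for real CLIP image embeddings.
index = {
    "moss_jacket.png": [0.9, 0.1, 0.0],
    "grass_dress.png": [0.8, 0.3, 0.1],
    "chrome_robot.png": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of the photo the user uploaded.
query = [1.0, 0.2, 0.0]

results = rank_by_similarity(query, index, top_k=2)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

The point of the sketch is that no text prompt is involved: the query is itself an image embedding, so hard-to-describe textures and shapes can still be matched. A real system would likely swap the linear scan for an approximate nearest-neighbor index once the collection gets large.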

9.21.22 → OpenAI dropped Whisper, an open-source neural net that approaches human-level robustness and accuracy on English speech recognition across accents and technical language, and it can run locally! Descript, watch out!

9.21.22 → Petals, a decentralized platform for running and fine-tuning 100B+ LMs. Self-hosting and hardware requirements are still a huge barrier for the layman to get involved in open-source language models, and Petals provides an alternative. I’m super intrigued to see the reward systems and secondary market that will crop up to incentivize GPU sharing, and would love to talk to anyone who’s thinking about this.

(The team states in their FAQ that they’re working on introducing incentive points for people who donate GPU time to swarm training; the points will be redeemable for higher-priority fine-tuning, for example. The public swarm will be launched in November 2022. In the meantime, you can launch and use your own private swarm, or fill in this form to donate some GPU time.)

Also: CLIP-Mesh generates 3D models from text prompts (9.7.22), Anthropic AI released their polysemanticity paper (9.14.22), Character AI released a beta of their chat characters you can play with (9.16.22), and this researcher demonstrated matching Instagram images against live camera feeds to find the footage of the moment an Instagram user took their photo (9.12.22).

Productizing New ML

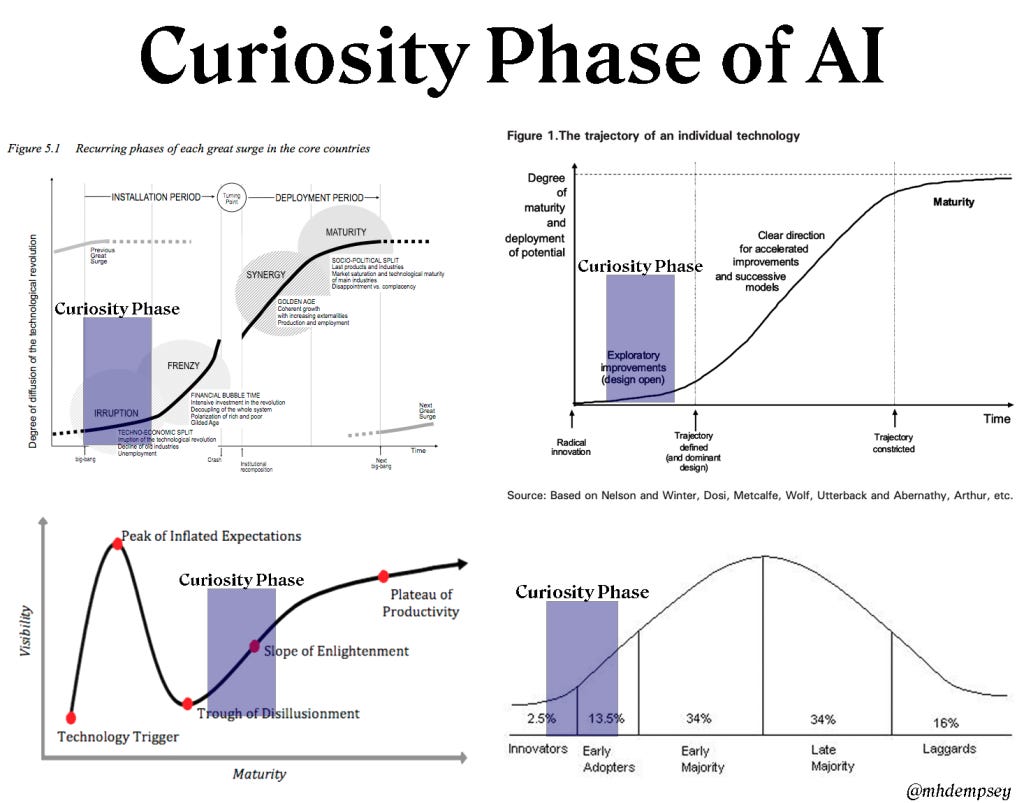

Company Building in the Curiosity Phase of AI (Mike Dempsey, 9.20.22)

On navigating GTM, product, and defensibility: “When thinking of GTM, AI-first companies should likely be targeting the highest-value incumbent users, or the “new professional” users that did not exist or were previously unable to make a living in their given role.” (Mike lays out the details of these two user groups within.)

Productizing Large Language Models (Replit, 9.21.22)

Great notes on quality assurance, performance, prompt engineering, and deployment & monitoring from the many LLMs Replit has deployed.

Generative AI: A Creative New World (Sequoia Capital, 9.19.22)

A comprehensive view of the three eras of generative AI, the landscape now, and application design.

Productization of new ML Models (9.13.22)

Sarah hits the nail on the head on the challenge of productizing new models for a repeatable & discernible user group: “Many "AI for X" companies failed in the past 5-10 years, not because their technology was not good enough, but because they could not find product-market fit.”

Bonus Round:

9.15.22 → Generated fluid architecture: DALLE 2 images, plus Runway for fluid frame interpolation between them, make a lovely, imaginative architecture video. (Click into the thread for a process walkthrough.)

9.12.22 → Stable Diffusion + Unreal Engine-driven character creation and refinement.

Lastly, I’m on the lookout for text to 3-D character animation. This seems inevitable as 3-D modeling + generative tooling get better, and as we layer natural language prompts on top of this infrastructure.

I’ve written before about how important game engines like Unreal and Unity are as a software primitive, and how they’ll evolve and inform other software in the future. I’m very excited to see this generative content layer, plus natural language querying, allow the layman to access the depths and wonders of mastering a game engine like Unreal.

Frontier tech jobs:

Metals: Magrathea

Infrastructure/Fintech/Agriculture: Ambrook

Machine Learning / Creative Tooling: Runway ML

If you’re interested in an environment where you watch ML research get productized, I can’t recommend highly enough that you apply to Runway.

Chemical Engineering / Biology: Helaina, synthetic breast milk

Neuroscience / BCI: Science.Xyz (Neuralink spinout): https://science.xyz/careers/

See details on what the team is working on here

Biology / (CV/ Embedded Systems) Engineering: Spaero, lab automation

Energy / Ocean Tech: Stealth

Biology: Culture Biosciences, cloud-based bioreactors

Biology / Mechanical Engineering: Bionaut, precision medicine delivery through remote-controlled nanobots

Healthcare: ARPA-H, Project Lead, help spin up the newest Advanced Research Projects Agency focused on healthcare and scaled biomedical research.

Biology: Convergent FROs, small agile organizations that aim to fill a gap in the translational science landscape.

Focused Research Organizations (FROs) undertake projects too big for an academic lab but not directly profitable enough to be a venture-backed startup or industrial R&D project. Think "Series-A-sized org whose product is a public good to revolutionize a scientific field".

Crypto: Volt Labs, work on crypto projects for the research arms of crypto-focused Volt Capital.

Gaming & Education: Hidden Door

Request for frontier tech help.

Looking to bounce around ideas about technology in the space? Looking for a cofounder or team member, or want to submit a job description to be featured in my newsletter? Trying to join a new frontier tech startup? Fill out the form below and let me know what’s on your mind. I’ll do my best to respond with an answer, suggestion, company, or meeting time!

